Measuring Impact

Do metrics matter?

Journal, article, or project-level metrics can help you tell a story about the importance or relevance of your research and scholarship, both in the scholarly community and beyond. While they certainly can't tell the whole story—after all, impact is as much about personal interactions, conversations, and collaboration as it is about the number of times someone downloaded your article—they are a useful tool to have when you want to demonstrate why your work matters to funders, hiring committees, tenure and promotion committees, or others.

Journal Impact Factor

CC BY 2.0 Andy Macguire


Calculated annually, a journal's impact factor is the average number of citations received in a given year by the items the journal published in the previous two years. A journal's 2023 impact factor, for example, counts citations made in 2023 to items it published in 2021 and 2022. Of course, some articles are more cited than others for reasons other than quality (controversial subject matter, say, or the presence of "citation cabals" looking to boost the purported rank of the journals in which they publish), and the "impact" of a journal as a whole is by no means evidence of the impact of any individual article published in it. Nonetheless, some researchers still like to (or are required to) consider a journal's impact factor when choosing where to publish. Clarivate Analytics' Journal Citation Reports, which itself warns against paying too much attention to the impact factor, ranks journals within each discipline by impact factor, but it also takes into account journal self-citation, journal performance over time, and how often the individual articles in a given journal are cited.
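To illustrate why a journal-level average says little about any single article, here is a minimal Python sketch of the two-year calculation under simplifying assumptions. The function name and the citation counts are hypothetical, and the sketch treats every item as citable. Clarivate's actual figure is drawn from its own citation index and counts citations to all items in the numerator but only citable items (such as articles and reviews) in the denominator.

```python
from statistics import median


def two_year_impact_factor(citations_by_item: list[int]) -> float:
    """Simplified (hypothetical) two-year impact factor for year Y.

    `citations_by_item` holds, for each item the journal published in
    Y-1 and Y-2, the number of citations that item received in year Y.
    Simplification: every item is treated as citable.
    """
    if not citations_by_item:
        raise ValueError("journal published no citable items in Y-1 and Y-2")
    # Total citations in year Y divided by the number of items: a mean.
    return sum(citations_by_item) / len(citations_by_item)


# Hypothetical journal with ten items, one of which is heavily cited.
cites = [0, 0, 1, 1, 1, 2, 2, 3, 4, 36]
print(two_year_impact_factor(cites))  # 5.0
print(median(cites))                  # 1.5
```

In this made-up journal, a single heavily cited article lifts the impact factor to 5.0, while the median item received only 1.5 citations. A high journal average is therefore weak evidence of how any one article in it performed.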

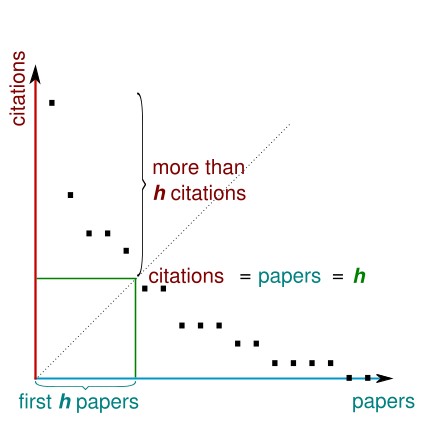

The H-Index

If you've set up a Google Scholar profile, you might notice something called the h-index under your name. Wondering what it is? It's a metric designed to indicate both a scholar's productivity (how much they've published) and citability (how often they've been cited): a scholar has an h-index of h if h of their publications have each been cited at least h times. But it's deeply flawed. "Acceptable" h-indices vary hugely by discipline, and the metric fails to take into account the rich, impactful, but less traditional forms of scholarship with which many academics engage. Further, because an h-index can never exceed the number of works a scholar has published, the "one number to rule them all" logic of the h-index disadvantages junior scholars (who won't have published as much) and scholars in humanities fields, which prize single-authored publications and monographs over co-authored works.
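The definition is simple enough to compute by hand. The Python sketch below is a hypothetical helper, not Google Scholar's code, and it works only on whatever citation counts you give it. It sorts per-publication citation counts from most to least cited and returns the largest rank h at which the h-th publication still has at least h citations.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h publications have >= h citations each.

    `citations` is a hypothetical list of per-publication citation counts.
    """
    ranked = sorted(citations, reverse=True)  # most-cited first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` publications all have >= rank citations
        else:
            break  # counts only decrease from here, so h cannot grow
    return h


# Hypothetical citation records (made-up numbers):
print(h_index([50, 40, 30]))  # 3: three publications, heavily cited
print(h_index([3, 3, 3]))     # 3: three publications, barely cited
print(h_index([1000]))        # 1: one widely cited monograph
```

The first two calls show two very different records collapsing to the same number. The third shows how a scholar whose reputation rests on a single, widely cited monograph ends up with an h-index of 1.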

Altmetrics

Alternative metrics, or altmetrics, offer another way to track the impact and use of your scholarship online. They provide a more complete picture of digital impact than traditional metrics because they count news media and Wikipedia mentions, social media shares, bookmarks, blog posts, and reviews as well as citations. Altmetrics also let you see results immediately, rather than waiting for citations to appear in other published works. Several commercial companies provide altmetrics, and many journals display their findings alongside a published article. If you’re curious to see altmetrics in action, try the following:

Sign up for ImpactStory, a tool run by a nonprofit organization that shows mentions of your work in tweets, Facebook posts, blog posts, and news sources. You create a profile using your Twitter account and connect your ORCID; ImpactStory then pulls in your publications from ORCID to give you a fairly complete picture of the online impact of your work.

Install Altmetric.com’s bookmarklet in your toolbar and click it when you’re on the page of a journal article or other item with a DOI to see where it has been mentioned in news articles, blogs, social media, policy documents, and more.

Values-Based Indicators

HuMetricsHSS is an initiative that aims to rethink what we value and reward in academia, particularly in the humanities and social sciences (HSS). The Andrew W. Mellon-funded project advocates "reverse engineering" reward systems: starting by discussing, negotiating, and agreeing upon a set of shared values that individuals, departments, and institutions can use to frame their thinking.

The team of co-PIs, which includes Columbia's assistant director for scholarly communication, Nicky Agate, developed an initial values framework to help scholars begin to think through their own value systems and how those values might be intentionally integrated into all sorts of professional academic work, not just the articles rewarded and "counted" by traditional metrics and altmetrics alike. Whether a scholar is creating a digital project, putting together an editorial board, designing a conference, or constructing a syllabus, they could ask themselves questions about equity (Who are they citing? How accessible is the work? Are they encouraging digital work that focuses on underrepresented voices?), openness (Are they sharing failures as well as successes? Are open-access versions of referenced work available? When ethically appropriate, is data being made open and licensed for others to use? Are they using open-source software?), collegiality (Are they citing colleagues and students who have helped them think through their ideas? Are they providing constructive criticism when evaluating peers, or trying to take others down?), quality (Are the results of their research reproducible? Do they value projects that push boundaries and risk failure?), and community (Are they engaging beyond their discipline, campus, political spectrum, or classroom? Are they collaborating, building networks, and fostering new areas of interdisciplinary expertise and new forms of knowledge production?).

The questions might change according to institutional or personal context, but the broad categories of interrogation would remain the same. The idea is that an individual scholar would be able to talk about the intentionality and values behind their work in conversations with colleagues and administrators, but—crucially—also that a values-based framework would already have been established institutionally as something of value.